Evaluation of the Research Support Fund (RSF) - Final Report.

© Her Majesty the Queen in Right of Canada, represented by the Minister of Industry, 2015

CR22-111/2020E-PDF

978-0-660-35703-4

Produced by NSERC’s and SSHRC’s Evaluation Division and Goss Gilroy Inc.

Executive Summary

About the Research Support Fund

Each year, the federal government invests in research excellence in the health sciences, engineering, natural sciences, social sciences and humanities through its three granting agencies: the Canadian Institutes of Health Research (CIHR), the Natural Sciences and Engineering Research Council (NSERC) and the Social Sciences and Humanities Research Council (SSHRC). With a few exceptions, federal government programs provide support only for the direct costs of research.

The RSF, formerly the Indirect Costs Program (ICP), is intended to reinforce the federal government’s research investment by helping institutions ensure that their federally funded research is conducted in world-class facilities, with the best equipment and administrative support available. Eligible institutions receive an annual grant through the RSF, calculated through a formula based on their federal research funding, to help pay for a portion of the central and departmental administrative costs related to federally funded research. Grants may be used to:

- maintain modern labs and equipment;

- provide access to up-to-date knowledge resources;

- provide research management and administrative support;

- meet regulatory and ethical standards; or

- transfer knowledge from academia to the private, public and not-for-profit sectors.

The RSF is an ongoing program with an annual allocation to eligible institutions. In 2017-18, the total funding available to institutions was $369,403,000.

About the Evaluation

The evaluation covered the five-year period since the last evaluation of the program, then called the ICP (2013-14 to 2017-18), and focused on the following four questions:

- How have changes in the research environment impacted the need for RSF?

- To what extent has the RSF contributed to effective use of direct federal research funding?

- Has the RSF continued to be delivered in a cost-efficient manner?

- How could performance information be collected (considering current challenges and barriers)?

The following lines of evidence were used to answer the evaluation questions: consultations with internal and external stakeholders (n=14); a review of key documents; a review of financial and administrative data; a website review of a sample of institutions (n=40); key informant interviews with a sample of institutional representatives (n=27); and data collected from institutions through an evaluation survey (n=97).

Conclusions and Recommendations

Evaluation Question 1: How have changes in the research environment impacted the need for RSF?

Funds for indirect costs of research help ensure that institutions can use direct research funds effectively. The Fundamental Science Review (2017) estimated that the actual indirect costs range from 40% to 60% of the value of research grants. The RSF program is intended to fund a portion of the indirect costs faced by institutions as a result of investments in direct research funding from the federal government.

As the federal government has continued investing in research at Canadian institutions, there continues to be a need for a federal contribution towards defraying indirect costs. From 2013-14 to 2017-18, direct research funding from the federal government increased significantly, and the government matched these increases with corresponding increases in the RSF. During this period, the RSF provided funding for indirect costs at an overall rate of 22% of the total value of direct research grants from the three agencies. However, this proportion declines as institution size increases: it is highest for small and medium-sized institutions and lowest for U15 institutions. As a result, the mean percentage across institutions, in which each institution counts equally, is much higher, at 58% of the value of direct research grants. This is because the RSF represents a larger proportion of the value of direct research grants for the many small and medium-sized institutions receiving funds.

In addition to the increases in direct research funding, increased requirements related to research integrity and ethics regulations have also contributed to higher indirect costs. The list of cost drivers identified is not exhaustive, as the evaluation explored this topic only briefly.

Finally, it is important to remember that it was beyond the scope of the evaluation to assess the true indirect cost of direct federal research investments at the institutions and the adequacy of the RSF program’s contribution from the institutions’ perspective.

Recommendation 1: Continue to contribute financially to defraying the indirect costs associated with federal investments in academic research.

Evaluation Question 2: To what extent has the RSF contributed to effective use of direct federal research funding?

The RSF program has continued to contribute successfully to defraying the indirect costs incurred by institutions as a result of federal investments in academic research. Immediate outcomes have been achieved, including investments in: research facilities; research resources; the ability to meet regulatory requirements; management and administration of the research enterprise; and transfer of knowledge, commercialization and management of intellectual property. However, the evaluation could not determine what proportion of institutions’ total eligible expenditures in each category the RSF funds represented.

Through interviews and surveys, institutions explained how these investments have contributed to the achievement of intermediate outcomes: well maintained and operated facilities; availability of relevant research resources; compliance with regulatory requirements and accreditation; an effectively managed and administered research enterprise; and efficient and effective knowledge mobilization and IP management. The examples from institutions provide evidence that the RSF has made a partial contribution, but the evaluation could not quantify or assess the extent of this contribution beyond institutions’ own perceptions of the funding’s impact. Institutions perceived that impact as very high and reported that removing RSF funds would have a large negative impact on the research enterprise. Given the RSF program’s partial, albeit non-quantifiable, contribution to its intermediate outcomes, it can be inferred that the program has also made a partial contribution to its final outcomes and to the departmental result in SSHRC’s Departmental Results Framework. Specifically, the RSF enabled institutions to use federal research funding from the three granting agencies effectively and thereby contributed to a strong university research environment. Most institutions echoed this, agreeing or strongly agreeing that the RSF has contributed to the intended final outcome of sustaining a strong research environment at their institution. Again, the extent of that contribution could not be assessed as part of the evaluation.

As part of assessing the program’s performance, the evaluation noted an inconsistency between the logic model and how the program works in practice. Specifically, the logic model overstates the strength of the causal link between the program’s immediate and intermediate outcomes. The RSF is a grant meant to offset a portion of the indirect costs related to federally funded research; it is not designed to fully support specific activities or projects. While this contributory nature is consistent with how the program is described in its Terms and Conditions, the logic model needs further adjustment to help frame communication of the program’s goals, as well as future evaluation and performance monitoring activities.

Recommendation 2: Refine the program logic model to make it clearer that the program is only expected to make a partial contribution to the expected intermediate and long-term outcomes.

Evaluation Question 3: Has the RSF continued to be delivered in a cost-efficient manner?

The RSF program has a low operating ratio, which has decreased over time. The operating costs of the RSF were, on average, 13¢ for every $100 in grant expenditures (an operating ratio of 0.13%). This suggests that the program has continued to be delivered in a cost-efficient manner. However, concerns were raised that resources for program administration are insufficient, making it challenging to allocate enough resources to change management, stakeholder engagement and improved performance monitoring. Concerns were also raised about the efficiency of the process used to calculate grant amounts, which relies on each of the three agencies to provide its direct funding amounts to the Tri-agency Institutional Programs Secretariat (TIPS).

Evaluation Question 4: How could performance information be collected (considering current challenges and barriers)?

A goal of the evaluation was to better understand why institutions have had difficulty with past performance reporting requirements introduced by TIPS and to identify possible approaches for performance monitoring that would both be acceptable to institutions and meet the needs of program management and government accountability requirements. While the consultations with the institutions helped shed some light on the former, it proved to be more challenging to reach a consensus view of how performance information could be collected. The evaluation was able to suggest improvements to the existing reporting template based on what institutions indicated they could report on, but it is not certain that these improvements will meet the needs of program management and government accountability requirements.

Specifically, the consultations with universities surfaced the key reasons why larger institutions in particular have resisted reporting data intended to quantify the intermediate outcomes. Large and research-intensive universities most often reported pooling their RSF funds with other operating funds before allocating them to sub-categories, thereby losing the ability to link particular expenditures to RSF funds beyond the five expenditure categories. While they understood that they are accountable for the public funds they receive, they were concerned about the reporting burden. They explained that detailed tracking would require costly changes to their current accounting systems and significant staff training. In addition, many of the indicators collected through various reporting tools did not resonate with them as valid measures of success. From their perspective, spending resources to track measures they did not consider valid would not improve accountability.

That said, the evaluation was able to identify and suggest some indicators that the current reporting template could use. These include asking institutions to identify how and where they plan to allocate funds in the eligible expenditure categories; indicate whether the RSF grant supported salaries in each of the eligible expenditure categories; and provide qualitative examples of ways in which the RSF contributed to the research environment within each of the eligible expenditure categories. Additionally, using a list of pre-established options, the program could ask institutions to indicate or approximate how RSF funds were used under each eligible expenditure category (institutions could select multiple options). This would provide a picture of the investments made and could help track trends over time, without asking for specific metrics that would be of limited use.

Taking into consideration the findings from this evaluation, it will ultimately be up to program management to decide what performance information institutions need to provide to give sufficient assurance of the program’s effectiveness. Program management will also need to decide what incentives to provide, or requirements to impose, if its needs go beyond what institutions have said they can easily report on (e.g., requiring more detailed expenditure information at the application stage or imposing requirements for tracking RSF funds). The policy requirements for program performance reporting are contained in the Directive on Results and the Policy on Transfer Payments. The directive places responsibility on program managers to ensure that valid, reliable and useful performance data are collected to manage their programs and to assess effectiveness and efficiency. However, the Policy on Transfer Payments states that such reporting requirements should be proportionate to the level of risk specific to the program, the materiality of funding and the risk profile of applicants and recipients. The extent to which the current program design supports the required performance reporting should also be taken into account. For example, there could be an opportunity to explore alternative design options that are more conducive to linking expenditures to outcomes. In doing so, it will be important to keep in mind that institutions particularly appreciated certain features of the program (such as the flexibility to move funding between the five expenditure categories).

Recommendation 3: Implement institutional reporting that is appropriate for the contributory nature of the program, the risk associated with the program and the performance information needs of program management.

1.0 Introduction

This report presents key findings, conclusions and recommendations from an evaluation of the Government of Canada’s Research Support Fund (RSF) conducted in 2019-20.

1.1 Evaluation Background and Purpose

The Research Support Fund (RSF), previously called the Indirect Costs Program (ICP), is a tri-agency transfer payment program involving the Social Sciences and Humanities Research Council (SSHRC), the Natural Sciences and Engineering Research Council (NSERC) and the Canadian Institutes of Health Research (CIHR). The program is administered by the Tri-agency Institutional Programs Secretariat (TIPS), which is housed at SSHRC. A detailed description of the program can be found in Appendix A.

The purpose of the evaluation was to provide TIPS senior management with an assessment of the performance, relevance, efficiency, design and delivery of the RSF. The evaluation was conducted in compliance with the legislative and policy requirements outlined in the Financial Administration Act and the Treasury Board Policy on Results.

1.2 Evaluation Scope and Questions

The scope of this evaluation is the five-year period since the last evaluation of the ICP (2013-14 to 2017-18). The evaluation does not include an assessment of the Incremental Project Grants as this stream of the RSF was first announced in the Government of Canada’s Budget 2018. Based on consultations with key program stakeholders, it was determined that the evaluation should focus on the following four questions:

- How have changes in the research environment impacted the need for RSF?

- To what extent has the RSF contributed to effective use of direct federal research funding?

- Has the RSF continued to be delivered in a cost-efficient manner?

- How could performance information be collected (considering current challenges and barriers)?

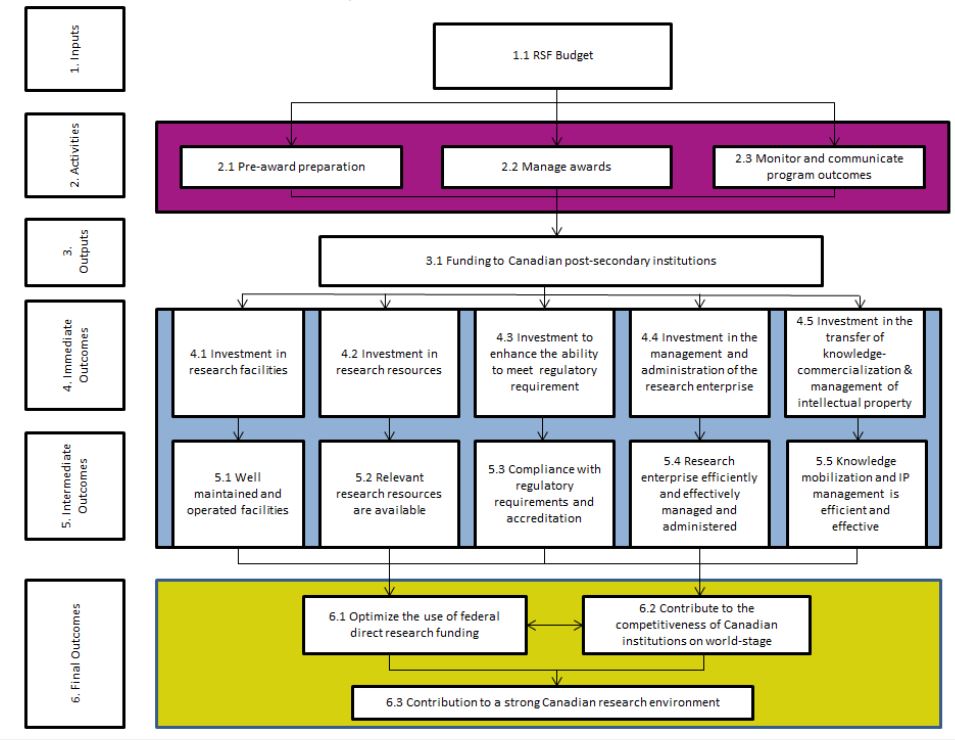

Effectiveness is measured against the program’s intended outcomes as depicted in the logic model (see Appendix B). The evaluation focuses on the program’s immediate outcomes (i.e., investment in the five expenditure categories: research facilities, research resources, meeting regulatory requirements, management/administration of the research enterprise, and transfer of knowledge, commercialization and management of intellectual property) and intermediate outcomes (i.e., well maintained and operated facilities, availability of relevant research resources, compliance with regulatory requirements and accreditation, efficient and effective management of the research enterprise, and efficient and effective knowledge mobilization and IP management) associated with the five expenditure categories covered by the program.

Long-term outcomes (i.e., optimize the use of federal direct research funding, contribute to the competitiveness of Canadian institutions on the world stage and contribute to a strong Canadian research environment) were not assessed directly because of expected limitations in quantifying the RSF’s contribution. Instead, they were assessed by proxy, by examining the extent to which the achievement of immediate and intermediate outcomes would lead to the effective use of direct research funding. The evaluation also assessed changes in the research environment since the last evaluation that might have affected the continued need for the RSF.

In addition to assessing relevance and performance, the evaluation was intended to support future performance reporting by helping program management better understand the current challenges and barriers to collecting performance information from RSF recipient institutions, and by informing how performance information could be collected in the future.

Equity, diversity and inclusion (EDI) impacts were considered when developing the evaluation survey. Interviews and the evaluation survey were used to seek feedback on any potential barriers to implementing EDI objectives resulting from the RSF eligibility guidelines. EDI is assessed in the section of the report on the intermediate outcome “research enterprise efficiently and effectively managed and administered”.

1.3 Evaluation Methodology

The following lines of evidence were used to answer the evaluation questions: consultations with internal and external stakeholders (n=14); a review of key documents; a review of financial and administrative data; a website review of a sample of institutions (n=40); key informant interviews with a sample of institutional representatives (n=27); and data collected from institutions through an evaluation survey (n=97).

1.4 Methodological Limitations

The methodology for this assignment presented a few limitations, outlined below:

- The website review and subsequent interviews were undertaken with a sample of institutions. While efforts were made to obtain a mix of institution sizes, types and range of RSF funding amounts, the evidence should not be considered representative of the entire population. To mitigate this limitation, the evaluation survey was administered to all institutions that received more than $25K in RSF funding in the previous year and extensive follow-up was undertaken to maximize the response rate.

- The response rate to the evaluation survey was 78% (including pilot testers). Most institutions were given limited time to complete the evaluation survey and little advance notice of the type of information that would be requested. As a result, some records are only partially completed or contain placeholder information. As much as possible, the data were cleaned to include only valid, complete answers. As was the case with the previous outcome reporting tools, only institutions that had received more than $25K from the RSF were included. Therefore, these results should not be generalized to institutions that received $25K or less.

- The assessment of intermediate and long-term outcomes relies on self-reported data collected from institutional representatives through the survey. Self-reported data can introduce several biases, such as self-serving bias, recall bias and measurement error bias. To partly mitigate these biases, the data were cross-referenced with other lines of evidence where possible and removed from analysis if the data were contradictory; a short recall period was used; and questions from previously used data collection instruments were used where appropriate.

1.5 Structure of the Report

This report is organized by evaluation question. Section 2 presents the findings on relevance; Section 3 presents the findings on performance (including effectiveness and efficiency); and Section 4 presents the findings on performance reporting. Section 5 presents high-level conclusions and the recommendations. The appendices present a program description (A), the program logic model (B) and a detailed description of the evaluation methodology (C).

2.0 Relevance

Conclusion:

Evaluation Question: How have changes in the research environment impacted the need for RSF?

Funds for indirect costs of research help ensure that institutions can use direct research funds effectively. The Fundamental Science Review (2017) estimated that the actual indirect costs range from 40% to 60% of the value of research grants. The RSF program is intended to fund a portion of the indirect costs incurred by institutions as a result of investments in direct research funding by the federal government.

As the federal government has continued investing in research at Canadian institutions, there continues to be a need for a federal contribution to defray indirect costs. From 2013-14 to 2017-18, direct research funding from the federal government increased significantly, and the government matched these increases with corresponding increases in the RSF. During this period, the RSF provided funding for indirect costs at an overall rate of 22% of the total value of direct research grants from the three agencies. However, this proportion declines as institution size increases: it is highest for small and medium-sized institutions and lowest for U15 institutions. As a result, the mean percentage across institutions, in which each institution counts equally, is much higher: 58% of the value of direct research grants. This is because the RSF represents a larger proportion of the value of direct research grants for the many small and medium-sized institutions receiving funds.

In addition to the increases in direct research funding, increased requirements related to research integrity and ethics regulations have also contributed to higher indirect costs. The list of cost drivers identified is not exhaustive, as the evaluation explored this topic only briefly.

Finally, it is important to remember that it was beyond the scope of the evaluation to assess the true indirect cost of direct federal research investments at the institutions and the adequacy of the RSF program’s contribution from the institutions’ perspective.

2.1 Program Rationale

The federal government has been providing direct research grants to postsecondary institutions since 1951. In the late 1990s, postsecondary institutions made the case that they could not continue to finance the indirect costs without jeopardizing their other roles, such as teaching. In response, Budget 2001 announced a one-time payment of $200 million to help alleviate the financial pressures associated with the institutional costs stemming from the increased federal funding for research. The Indirect Costs Program was made a permanent program in Budget 2003. The program was renamed RSF in 2014.

The RSF is intended to reinforce the federal government’s research investment by helping institutions ensure that their federally funded research projects are conducted in world-class facilities, with the best equipment and administrative support available. In other words, RSF subsidizes the indirect costs of research in Canadian postsecondary institutions and their affiliated research hospitals and institutes.

The allocation of RSF funds is achieved by using a progressive funding formula. This formula provides higher rates of funding for the institutions that receive the least amount of money from the federal research granting agencies. In this way, the RSF helps smaller institutions provide adequate support to their research programs and strengthen their research capacity.

Over the years, the program has funded indirect costs at a fairly consistent rate of about 22% of direct research funding. However, because of the progressive funding formula, this proportion declines as institution size increases: it is highest for small and mid-size institutions and lowest for U15 institutions. As a result, the mean percentage across institutions, in which each institution counts equally, is much higher: 58%. This is because the RSF covers a larger proportion of the value of direct research grants for the many small and mid-size institutions receiving funds. This is shown in Table 1 below (see also the funding formula in Appendix A). According to the Fundamental Science Review (2017), the actual indirect costs range from 40% to 60% of the value of research grants.

Table 1 RSF proportion of eligible direct research funding, average from 2013-14 to 2017-18

| Institution type | RSF investment ($) | Eligible direct funding ($) | Percentage of direct funding |
|---|---|---|---|
| Small | 1,051,479.40 | 1,314,349.00 | 80.0% |
| Mid-size | 4,975,253.60 | 8,534,507.80 | 58.3% |
| Large | 32,280,043.00 | 72,965,109.20 | 44.2% |
| Non-U15 | 70,902,681.80 | 266,020,631.80 | 26.6% |
| U15 | 241,624,058.80 | 1,229,492,882.40 | 19.6% |
| Overall | 350,833,516.60 | 1,578,327,480.20 | 22.2% |
| Mean percentage across all institutions* | | | 57.7% |

*Mean, weighted for total number of institutions in each type.

Source: Administrative data
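The gap between the overall rate (22.2%) and the institution-level mean (57.7%) in Table 1 comes from how the two figures are averaged. The minimal Python sketch below, an illustration rather than the program’s own calculation, recomputes the percentage column of Table 1 from its dollar amounts and then contrasts a dollar-weighted ratio of sums with an unweighted mean of per-recipient ratios. The function names and the three recipients in the second part are hypothetical. Recomputed from the averaged dollar amounts, each type’s percentage agrees with the table to within about 0.1 percentage point; the 57.7% figure itself cannot be reproduced here because the number of institutions in each type is not shown.

```python
"""Sketch: RSF as a share of eligible direct funding, using Table 1 averages.

Function names are illustrative (hypothetical), not part of any TIPS system.
"""

# Average annual amounts by institution type, 2013-14 to 2017-18 (Table 1):
# type -> (RSF investment in $, eligible direct funding in $)
TABLE_1 = {
    "Small": (1_051_479.40, 1_314_349.00),
    "Mid-size": (4_975_253.60, 8_534_507.80),
    "Large": (32_280_043.00, 72_965_109.20),
    "Non-U15": (70_902_681.80, 266_020_631.80),
    "U15": (241_624_058.80, 1_229_492_882.40),
}


def rsf_percentage(rsf: float, direct: float) -> float:
    """RSF investment as a percentage of eligible direct funding."""
    return 100.0 * rsf / direct


def ratio_of_sums(pairs: list[tuple[float, float]]) -> float:
    """Dollar-weighted rate: every dollar counts equally, so large recipients dominate."""
    total_rsf = sum(rsf for rsf, _ in pairs)
    total_direct = sum(direct for _, direct in pairs)
    return rsf_percentage(total_rsf, total_direct)


def mean_of_ratios(pairs: list[tuple[float, float]]) -> float:
    """Unweighted mean of per-recipient rates: every recipient counts equally."""
    return sum(rsf_percentage(rsf, direct) for rsf, direct in pairs) / len(pairs)


if __name__ == "__main__":
    for kind, (rsf, direct) in TABLE_1.items():
        print(f"{kind:<9}{rsf_percentage(rsf, direct):6.1f}%")
    print(f"Overall  {ratio_of_sums(list(TABLE_1.values())):6.1f}%")

    # Hypothetical recipients (not from the report): two small ones at 80%, one large one at 20%.
    hypothetical = [(400_000, 500_000), (400_000, 500_000), (20_000_000, 100_000_000)]
    print(f"Ratio of sums:  {ratio_of_sums(hypothetical):.1f}%")
    print(f"Mean of ratios: {mean_of_ratios(hypothetical):.1f}%")
```

With the hypothetical figures, the dollar-weighted rate is about 20.6% while the mean of the three ratios is 60%, which mirrors the pattern in Table 1: a few large recipients dominate the dollar-weighted figure, while the many small recipients dominate a mean in which each institution counts once.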

All institutions consulted confirm that the funding plays an important role in supporting their research capacity, especially given that few sources of indirect funding offer the same level of flexibility and the possibility to allocate funds to institutional operations.

2.2 Emerging Cost Drivers Associated with Federal Research Funding Agencies

Over the evaluation period, direct funding for research has increased, which has also driven up indirect costs. In the broader research environment, the amount universities spend on research has grown over time. The 2008-09 five-year program evaluation indicates that university research expenditures grew from $5 billion in the late 1990s to $10 billion in 2007-08, and that federally funded university research and training increased by 150% over the same period. (From 2007 to 2012, 47% of university-sponsored research funding came from the federal government.) During the current five-year evaluation period, increases in eligible direct funding have been matched by increases in the RSF, as shown in Table 2.

Table 2 Eligible direct funding and indirect investment through RSF

| | 2013-14 | 2014-15 | 2015-16 | 2016-17 | 2017-18 | 2013-14 to 2017-18 (annual average) |
|---|---|---|---|---|---|---|
| Eligible direct research funding (average of 3 preceding years) | $1,549,130,017 | $1,565,934,727 | $1,576,188,309 | $1,595,283,367 | $1,605,100,982 | $1,578,327,480 |
| Total RSF awarded | $332,403,000 | $341,403,000 | $341,403,000 | $369,555,583 | $369,403,000 | $350,833,517 |
| Average reimbursement rate | 21% | 22% | 22% | 23% | 23% | 22% |

Source: Control sheets 2013-14 to 2017-18
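The “Average reimbursement rate” row of Table 2 appears to be the RSF awarded divided by the eligible direct funding for the same year (the latter already being a three-year average), since recomputing it that way reproduces the published row. The minimal Python sketch below, with illustrative names only, recomputes each year’s rate and the five-year annual averages from the table’s own figures.

```python
"""Sketch: recompute Table 2's reimbursement rates (RSF awarded / eligible direct funding).

Names are illustrative (hypothetical); the figures are copied from Table 2.
"""

YEARS = ["2013-14", "2014-15", "2015-16", "2016-17", "2017-18"]
# Eligible direct research funding (average of the 3 preceding years), in $
ELIGIBLE_DIRECT = [1_549_130_017, 1_565_934_727, 1_576_188_309, 1_595_283_367, 1_605_100_982]
# Total RSF awarded, in $
RSF_AWARDED = [332_403_000, 341_403_000, 341_403_000, 369_555_583, 369_403_000]


def reimbursement_rate(rsf: float, direct: float) -> float:
    """RSF awarded as a percentage of eligible direct funding."""
    return 100.0 * rsf / direct


# Each year's rate, rounded to a whole percentage as in the table
annual_rates = {
    year: round(reimbursement_rate(rsf, direct))
    for year, rsf, direct in zip(YEARS, RSF_AWARDED, ELIGIBLE_DIRECT)
}

# Five-year annual averages and the rate computed from them (a ratio of averages)
avg_rsf = sum(RSF_AWARDED) / len(RSF_AWARDED)
avg_direct = sum(ELIGIBLE_DIRECT) / len(ELIGIBLE_DIRECT)
period_rate = round(reimbursement_rate(avg_rsf, avg_direct))

if __name__ == "__main__":
    for year, rate in annual_rates.items():
        print(year, f"{rate}%")
    print(f"Annual average: RSF ${avg_rsf:,.0f} on ${avg_direct:,.0f} -> {period_rate}%")
```

Rounded to whole dollars, the averages come out at $350,833,517 and $1,578,327,480, the values in the last column. The sketch takes the five-year rate as a ratio of the averaged amounts; averaging the five annual rates instead also rounds to 22% with these figures.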

According to the internal and external stakeholders consulted during the planning phase of the evaluation, increased requirements related to research integrity and ethics regulations have also contributed to higher indirect costs, in addition to the increases in direct research funding. The need for digital research management infrastructure was also described as a cost driver, but it did not stem directly from the granting agencies. The list of cost drivers identified is not exhaustive, as the evaluation explored this topic only briefly.

Finally, the institutional representatives interviewed highlighted that the flexibility to decide where RSF funding is allocated was important for responding to institution-level cost drivers, needs and priorities. Institutions have shifted their allocations over the years; for example, this flexibility has allowed them to concentrate administrative support in a particular area and to build research agendas.

3.0 Performance

3.1 Effectiveness

Conclusion:

Evaluation Question: To what extent has the RSF contributed to effective use of direct federal research funding?

The RSF program has continued to contribute successfully to defraying the indirect costs incurred by institutions as a result of federal investments in academic research. Immediate outcomes have been achieved over the evaluation period, including investments in: management and administration of the research enterprise ($598 million); research facilities ($533 million); research resources ($343 million); the ability to meet regulatory requirements ($151 million); and transfer of knowledge, commercialization and management of intellectual property ($102 million). However, the evaluation could not determine what proportion of institutions’ total eligible expenditures in each category the RSF funds represented.

Through interviews and surveys, institutions explained how these investments have contributed to the achievement of intermediate outcomes: well maintained and operated facilities; availability of relevant research resources; compliance with regulatory requirements and accreditation; effective management and administration of the research enterprise; and efficient and effective knowledge mobilization and IP management. The examples from institutions provide evidence that the RSF has made a partial contribution, but the evaluation could not quantify or assess the extent of this contribution beyond institutions’ own perceptions of the funding’s impact. Institutions perceived that impact as very high and reported that removing RSF funds would have a large negative impact on the research enterprise. Given the RSF program’s partial, albeit non-quantifiable, contribution to its intermediate outcomes, it can be inferred that the program has also made a partial contribution to its final outcomes and to the departmental result in SSHRC’s Departmental Results Framework. Specifically, the RSF enables institutions to use federal research funding from the three granting agencies effectively, thereby contributing to a strong university research environment. Most institutions echoed this, agreeing or strongly agreeing that the RSF has contributed to the intended final outcome of sustaining a strong research environment at their institution. Again, the extent of that contribution could not be assessed as part of the evaluation.

As part of assessing the program’s performance, the evaluation noted an inconsistency between the logic model and how the program works in practice. Specifically, the logic model overstates the strength of the causal link between the program’s immediate and intermediate outcomes. The RSF is a grant meant to offset a portion of the indirect costs related to federally funded research; it is not designed to fully support specific activities or projects. While this contributory nature is consistent with how the program is described in its Terms and Conditions, the logic model needs further adjustment to help frame communication of the program’s goals, as well as future evaluation and performance monitoring activities.

3.1.1 Immediate outcome: RSF investment in the five expenditure categories

The logic model outlines five immediate outcomes: investment in research facilities; investment in research resources; investment to enhance the ability to meet regulatory requirements; investment in the management and administration of the research enterprise; and investment in the transfer of knowledge, commercialization and management of intellectual property.

Over the five-year evaluation period from 2013-14 to 2017-18, approximately $1.7 billion in RSF funding was distributed to 139 eligible institutions. The RSF funds were used by institutions to pay for a portion of the indirect costs they incur related to federally funded research in the five expenditure categories that relate to the intended immediate outcomes. Table 3 below shows the total RSF funding allocated by expenditure category/intended immediate outcome across the evaluation period.

From 2013-14 to 2017-18, the institutions funded by RSF used their grants mainly for management and administration of the research enterprise (34%) and research facilities (31%), followed by research resources (20%), enhancing the ability to meet regulatory requirements (9%), and transfer of knowledge, commercialization and management of intellectual property (6%). Institutions’ allocation of RSF funding across the five expenditure categories was consistent over the evaluation period, with each category’s annual share fluctuating by no more than 3 percentage points.

Table 3 Grant expenditures by expenditure category/intended immediate outcome (2013-14 to 2017-18)

| Investment in/to: | 2013-14 | 2014-15 | 2015-16 | 2016-17 | 2017-18 | Total |
|---|---|---|---|---|---|---|
| Research facilities | $103,691,852 | $103,378,232 | $101,793,323 | $113,950,202 | $110,650,764 | $533,464,373 |
| Research resources | $66,285,311 | $64,522,113 | $67,429,640 | $70,436,589 | $74,327,951 | $343,001,604 |
| Management and administration of the research enterprise | $111,621,183 | $113,674,233 | $118,367,027 | $130,344,440 | $123,607,935 | $597,614,818 |
| Enhance the ability to meet regulatory requirements | $28,146,568 | $25,397,702 | $32,827,263 | $33,535,746 | $31,299,017 | $151,206,296 |
| Transfer of knowledge, commercialization and management of intellectual property | $18,833,031 | $16,693,156 | $18,653,066 | $18,653,613 | $29,451,367 | $102,284,233 |
| Total | $328,577,945 | $323,665,436 | $339,070,319 | $366,920,590 | $369,337,034 | $1,727,571,324 |

Source: Statement of Accounts 2013-14 to 2017-18
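The category shares and the stability of annual allocations can both be recomputed from the Table 3 figures as an arithmetic check. The Python sketch below is a minimal illustration. It assumes that "annual fluctuation" means the gap between a category's highest and lowest single-year share of that year's total. The dictionary `TABLE3` and both helper functions are hypothetical names introduced only for this example.

```python
# Annual grant expenditures by category, transcribed from Table 3 (dollars, 2013-14 to 2017-18).
TABLE3 = {
    "Research facilities": [103_691_852, 103_378_232, 101_793_323, 113_950_202, 110_650_764],
    "Research resources": [66_285_311, 64_522_113, 67_429_640, 70_436_589, 74_327_951],
    "Management and administration": [111_621_183, 113_674_233, 118_367_027, 130_344_440, 123_607_935],
    "Regulatory requirements": [28_146_568, 25_397_702, 32_827_263, 33_535_746, 31_299_017],
    "Knowledge transfer and IP": [18_833_031, 16_693_156, 18_653_066, 18_653_613, 29_451_367],
}


def five_year_shares(table):
    """Each category's share of total spending over the whole period."""
    grand_total = sum(sum(years) for years in table.values())
    return {cat: sum(years) / grand_total for cat, years in table.items()}


def annual_share_range(table):
    """Gap between each category's highest and lowest single-year share (assumed reading of 'fluctuation')."""
    n_years = len(next(iter(table.values())))
    year_totals = [sum(years[i] for years in table.values()) for i in range(n_years)]
    result = {}
    for cat, years in table.items():
        shares = [years[i] / year_totals[i] for i in range(n_years)]
        result[cat] = max(shares) - min(shares)
    return result


for cat, share in five_year_shares(TABLE3).items():
    print(f"{cat}: {share:.1%} of the five-year total")
for cat, gap in annual_share_range(TABLE3).items():
    print(f"{cat}: annual share varied by {gap * 100:.1f} percentage points")
```

Run as is, it reports management and administration at about 34.6% of the five-year total. Knowledge transfer, driven by the 2017-18 increase, shows the widest annual swing, just under 3 percentage points.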

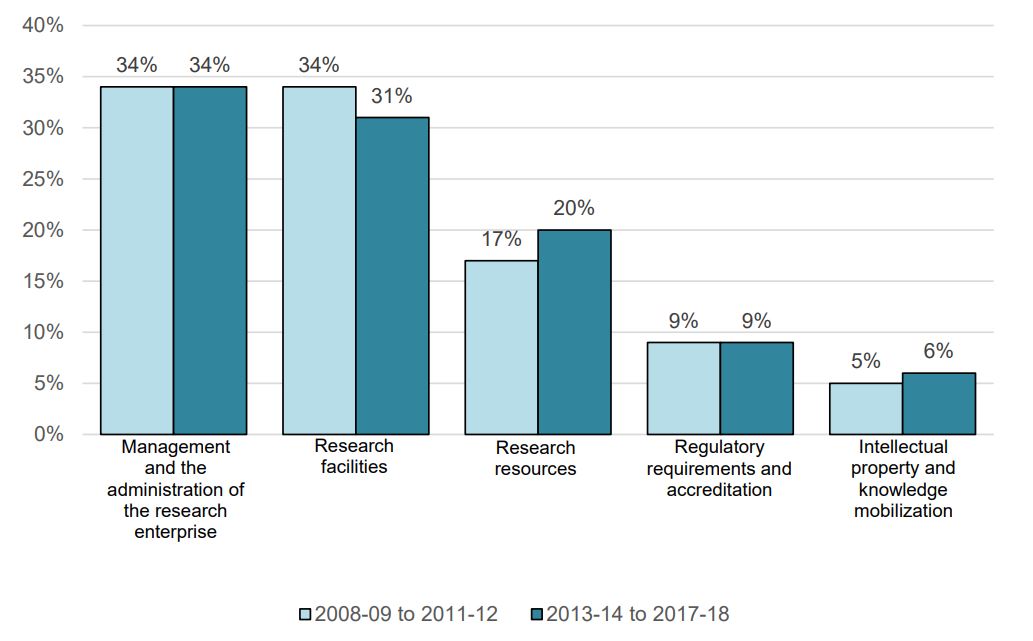

The distribution of funding has remained fairly stable over the past decade, as shown in Figure 1, which compares data from the previous evaluation with data from the current evaluation.

Figure 1 Institutions’ allocation of RSF funding (2008-09 to 2011-12 [N=125] vs. 2013-14 to 2017-18 [N=139])

Source: Statement of Accounts 2008-09 to 2017-18


| Expenditure category | From 2008-09 to 2011-12 | From 2013-14 to 2017-18 |
|---|---|---|
| Management and administration of the research enterprise | 34% | 34% |
| Research facilities | 34% | 31% |
| Research resources | 17% | 20% |
| Regulatory requirements and accreditation | 9% | 9% |
| Intellectual property and knowledge mobilization | 5% | 6% |

Source: Statement of Accounts 2013-14 to 2017-18

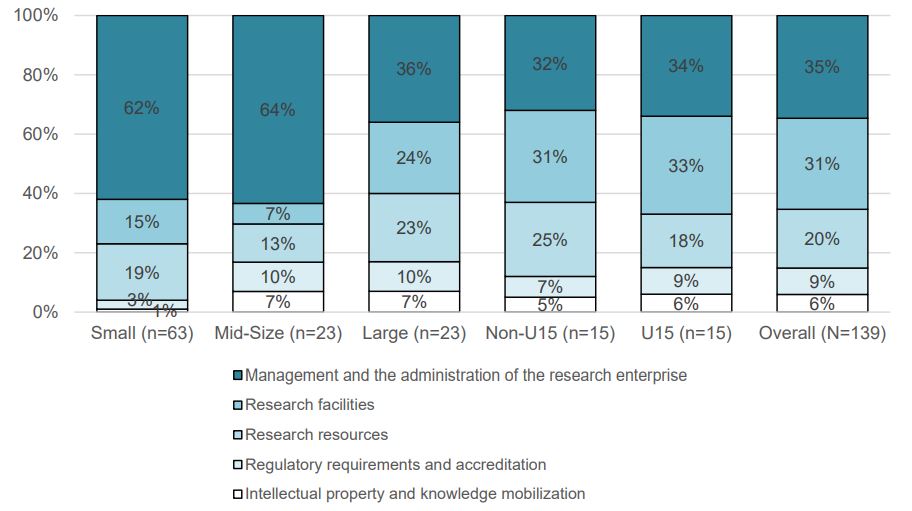

Institutions of different sizes tend to allocate their funding differently across the five expenditure categories. Figure 2 below illustrates average RSF fund allocation by institution size between 2013-14 and 2017-18. The data show that, over the five-year evaluation period, small and mid-size institutions allotted a much larger share of their RSF funding to management and administration of the research enterprise (62% and 64%) than did large and research-intensive (U15 and non-U15) institutions (32% to 36%). Meanwhile, large and research-intensive institutions directed a greater proportion of their funds to research facilities (24% to 33%, compared with 15% for small and 7% for mid-size institutions). Patterns in the remaining categories were less uniform. Small institutions allocated the lowest shares to enhancing the ability to meet regulatory requirements (3%) and to transfer of knowledge, commercialization and management of intellectual property (1%). Mid-size institutions allocated the lowest share to research resources (13%).

Figure 2 Institutions’ allocation of RSF funding by institution size and expenditure category (2013-14 to 2017-18)

Source: Statement of Accounts 2013-14 to 2017-18


| Expenditure category | Small (n=63) | Mid-Size (n=23) | Large (n=23) | Non-U15 (n=15) | U15 (n=15) | Overall (N=139) |
|---|---|---|---|---|---|---|
| Intellectual property and knowledge mobilization | 1% | 7% | 7% | 5% | 6% | 6% |
| Regulatory requirements and accreditation | 3% | 10% | 10% | 7% | 9% | 9% |
| Research resources | 19% | 13% | 23% | 25% | 18% | 20% |
| Research facilities | 15% | 7% | 24% | 31% | 33% | 31% |
| Management and administration of the research enterprise | 62% | 64% | 36% | 32% | 34% | 35% |

Source: Statement of Accounts 2013-14 to 2017-18
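The group-by-group comparisons drawn from Figure 2 can be reproduced mechanically. The Python sketch below is a hypothetical helper, not something used in the evaluation. It transcribes the Figure 2 percentages (excluding the Overall column) and returns the institution group or groups with the largest or smallest share in each category, listing ties together.

```python
# Percent of RSF funding by category and institution group, transcribed from Figure 2 (2013-14 to 2017-18).
FIGURE2 = {
    "Intellectual property and knowledge mobilization": {"Small": 1, "Mid-size": 7, "Large": 7, "Non-U15": 5, "U15": 6},
    "Regulatory requirements and accreditation": {"Small": 3, "Mid-size": 10, "Large": 10, "Non-U15": 7, "U15": 9},
    "Research resources": {"Small": 19, "Mid-size": 13, "Large": 23, "Non-U15": 25, "U15": 18},
    "Research facilities": {"Small": 15, "Mid-size": 7, "Large": 24, "Non-U15": 31, "U15": 33},
    "Management and administration of the research enterprise": {"Small": 62, "Mid-size": 64, "Large": 36, "Non-U15": 32, "U15": 34},
}


def groups_at_extreme(category, largest=True):
    """Group(s) with the largest (or smallest) share for a category; tied groups are all returned."""
    shares = FIGURE2[category]
    target = max(shares.values()) if largest else min(shares.values())
    return [group for group, pct in shares.items() if pct == target]


for category in FIGURE2:
    print(f"{category}: largest share {groups_at_extreme(category)}, "
          f"smallest share {groups_at_extreme(category, largest=False)}")
```

For example, the sketch identifies U15 institutions as allocating the largest share to research facilities. It shows mid-size and large institutions tied for the largest share to intellectual property and knowledge mobilization.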

Criteria and Processes Used by Institutions for Allocating Funds

Allocating RSF funding is typically the responsibility of the vice-president Research (or equivalent), or the chief financial officer. In a small number of cases, allocation of RSF funding is done by research and finance management teams (jointly or separately) or by the vice-president Academic or faculty deans.

The criteria and processes that institutions use to determine how much to allocate, and where and how, varied between institutions, depending partly on their size and organizational structure. Larger institutions are more likely to use established models and formulas, with varying levels of sophistication. Medium and smaller institutions typically allocate based on need, with some investing their RSF grants in fixed or stable areas with limited variation. The following modes of allocation were identified, with some institutions combining more than one approach:

- Allocation based on historical spending/actual expenditures: institutions consider eligible costs or expenditures (or overall indirect costs and expenditures) and determine the percentage to be allocated for each category (pro-rata).

- Allocation based on annual needs/priority assessments: institutions have a yearly review mechanism to allocate to priority areas or specific upcoming or ongoing institutional projects. This can be based on consultations with various stakeholders.

- Allocation based on past practice: some institutions indicated they allocate RSF to the same categories or sub-categories of spending to support research; a few adjustments can be introduced on an as-needed basis.

- Allocation based on pre-established formula or allocation models: some research-intensive and large institutions have a defined model or calculation methodology.

- Allocation that is decentralized: some large and research-intensive institutions present a decentralized model (or a partially decentralized model) where all or part of the RSF grant is distributed to particular units/faculties/affiliates that then make more specific allocation decisions or inject RSF funds into their budgets.
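The first mode above, pro-rata allocation based on historical or actual expenditures, can be made concrete with a short calculation. Here is a minimal Python sketch. The function name, the whole-dollar rounding rule and all figures are hypothetical assumptions for illustration; the report does not describe any institution's actual calculation.

```python
def allocate_pro_rata(grant, eligible_costs):
    """Split a grant across categories in proportion to eligible costs, in whole dollars (hypothetical rule)."""
    total = sum(eligible_costs.values())
    if grant < 0 or total <= 0:
        raise ValueError("grant must be non-negative and eligible costs must sum to a positive amount")
    # Floor each category's proportional share so the parts never exceed the grant.
    allocation = {cat: grant * cost // total for cat, cost in eligible_costs.items()}
    # Give the few dollars lost to flooring to the category with the largest eligible costs.
    largest = max(eligible_costs, key=eligible_costs.get)
    allocation[largest] += grant - sum(allocation.values())
    return allocation


# Hypothetical institution: a $500,000 grant and last year's eligible indirect costs by category.
example_costs = {
    "Research facilities": 400_000,
    "Research resources": 250_000,
    "Management and administration": 300_000,
    "Regulatory requirements": 50_000,
}
print(allocate_pro_rata(500_000, example_costs))
```

With these made-up inputs, each category receives half of its eligible costs, because the grant equals half of total eligible costs.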

Most large and research-intensive institutions allocate RSF to an overall operating budget or to other entities, such as affiliates, faculties and other units. These institutions reported that tracking RSF expenditures in detail becomes difficult once the funds are merged into an overall source of funds, such as a general operating budget. As a matter of practice, these institutions do not identify a source of funds (e.g., RSF) when making expenditures, and many of their financial systems are not set up to allow this level of expenditure tracking. Medium and small institutions are better able to track RSF expenditures. This issue is further discussed in Section 4.2.

Institutions, through their own allocation strategies, have directed approximately $598 million to the management and administration of the research enterprise, $533 million to research facilities, $343 million to research resources, $151 million to enhance the ability to meet regulatory requirements and $102 million to the transfer of knowledge, commercialization and management of intellectual property between 2013-14 and 2017-18. Therefore, the intended immediate outcomes of making an investment in the five expenditure categories have successfully been achieved through the RSF.

3.1.2 Intermediate outcomes

Institutions’ investments in the five expenditure categories are expected to contribute to the intended intermediate outcomes: facilities are well maintained and operated; relevant research resources are available; regulatory requirements and accreditation are complied with; the research enterprise is efficiently and effectively managed and administered; and knowledge mobilization and intellectual property management are efficient and effective.

Institutions were able to show how this investment contributed to the intermediate outcomes through self-reported qualitative examples, as described below. While limitations are inherent in all studies that rely on self-reported data, these data can provide deeper insight into how institutions view the RSF program’s contribution.

As part of assessing the program’s performance, an inconsistency was noted between the logic model and how the program works in practice. Specifically, the program’s logic model over-emphasizes the strength of the causal link between the program’s immediate and intermediate outcomes. In fact, the RSF is a grant meant to offset a portion of the indirect costs of federally funded research: it is not designed to fully support specific activities or projects. While this contributory nature is consistent with how the program is described in its Terms and Conditions, further adjustments are required to help frame communication of the program’s goals, as well as future evaluation and performance-monitoring activities.

While the RSF is not the only source of funding that institutions rely on to cover the indirect costs of research, the impacts reported by institutions indicate that the RSF has been making an important, if only partial, contribution to achieving these intermediate outcomes.

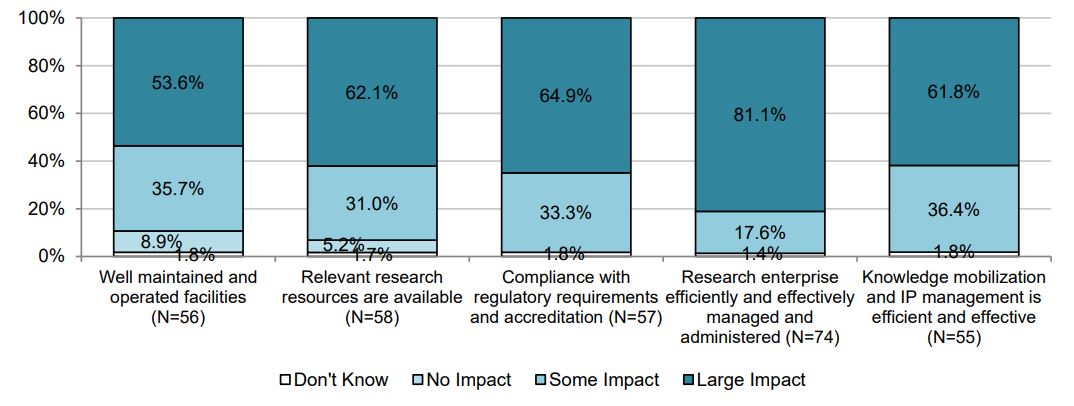

Figure 3 shows that most institutions surveyed indicated that the RSF had a large impact on their ability to: have well maintained and operated facilities (54%); ensure that relevant research resources are available (62%); efficiently and effectively manage and administer their research enterprise (81%); comply with regulatory requirements and accreditation (65%); and efficiently and effectively manage knowledge mobilization and intellectual property (62%).

The variations seen in the level of impact show that, in the absence of RSF, not all institutions expect all eligible expense categories to be affected. This corresponds to the fact that some institutions allocate a greater proportion of their funding to certain categories (as shown in Figure 2 above). This variation also helps to alleviate concerns over self-reporting bias as not all responses were in the upper end of the scale (i.e., “large impact”).

Figure 3 Perceived impact on the intermediate outcomes

Source: Evaluation survey, 2018-19


| Impact category | Well maintained and operated facilities (N=56) | Relevant research resources are available (N=58) | Compliance with regulatory requirements and accreditation (N=57) | Research enterprise efficiently and effectively managed and administered (N=74) | Knowledge mobilization and IP management is efficient and effective (N=55) |
|---|---|---|---|---|---|
| Don't know | 1.8% | 1.7% | 1.8% | 1.4% | 1.8% |
| No impact | 8.9% | 5.2% | 0.0% | 0.0% | 0.0% |
| Some impact | 35.7% | 31.0% | 33.3% | 17.6% | 36.4% |
| Large impact | 53.6% | 62.1% | 64.9% | 81.1% | 61.8% |

Source: Evaluation survey; 2018-19

The institutional representatives completing the evaluation survey indicated that it was challenging to report on RSF impacts: not all institutions were able to estimate the level of impact they would experience in the absence of funding. As a result, the response rate for the question on impact (57%) was lower than the overall evaluation survey response rate (78%). However, institutions described the contribution of RSF to each of the intermediate outcomes in narrative responses. The main themes for each outcome are presented below.

Well maintained and operated facilities

“Our university has seen steady growth in the square footage of research laboratories (…) The RSF is critical to supporting this increasing space through provision of maintenance, upgrades, utilities, etc.” (U15 institution)

Over $533 million was invested through the RSF over the five-year period from 2013-14 to 2017-18 to support well maintained and operated facilities. Just over half of the institutions (52%) that allocated funds to this category reported using RSF funds to cover salaries related to research facilities. Findings from the document review and evaluation survey show that RSF funds allocated to this category are also used to cover operating costs (most frequently), utility costs, technical support, maintenance, general renovation and improvement, and upgrades and maintenance of research equipment.

More specifically, respondents indicated that RSF funding helps with: growth, renovations and upgrades of facilities, research spaces and installations; new acquisitions of materials; maintenance work and emergency repairs of specialized equipment; and maintenance or technical staff salaries.

“RSF grant supports the small-scale emergency repair and operation of specialized research equipment. The grant also partially supports salaries of trained technicians to support, maintain and service the research lab equipment.” (Medium institution)

When institutions were asked about the impact of RSF funds on well maintained and operated facilities (i.e., what would happen in the absence of RSF), responses were mixed. Among institutions that allocated funding to this category, 54% reported that RSF had a “large” impact and 36% reported it had “some” impact. Less than half (43%) of U15 institutions indicated a “large” impact, despite allocating a larger share of their funding to this category than any other institution type. Because the overall scale of research facilities at these institutions is extremely large, RSF likely covers only a small proportion of the indirect costs associated with facilities, and institutions’ assessment of impact is likely more conservative in this expenditure category. Nevertheless, the data indicate that RSF does contribute to the maintenance and operation of research facilities.

Overall, qualitative responses provided by institutions during this evaluation indicated that RSF helps maintain and operate research facilities at institutions across Canada.

Relevant research resources are available

“RSF funding has helped to sustain the research-related collections in our library. Without RSF funding we would have had to significantly decrease electronic library research holdings.” (Large institution)

A total of $343 million has been invested from 2013-14 to 2017-18 to help ensure that relevant research resources are available. RSF funds allocated to this category are used for library resources for research (e.g., electronic journal subscriptions, interlibrary loans, library staff, etc.) and to maintain the level of access to resources when faced with various pressures. Half of the institutions surveyed indicated that they use RSF funds in this category to cover salary costs.

According to the document review and interviews conducted during this evaluation, funds were also used for: improvements to electronic information resources; upgrades, maintenance and/or operations of libraries; research/statistical software acquisition; development of software for student and faculty open access publications; and insurance for research equipment. The RSF contributed to the development and maintenance of complex telecommunications infrastructure, typically at larger institutions. The RSF also facilitated the acquisition of resources, supported full-time equivalent (FTE) positions and contributed to the development of tools.

In the evaluation survey, 62% of institutions that allocated funding to this category indicated that RSF had a “large” impact, and 31% reported it had “some” impact. Most non-U15 research-intensive (86%) and large (81%) institutions indicated that RSF had a “large” impact on the availability of relevant research resources in the past year. This is consistent with the fact that those two groups allocate the largest share of their RSF funding to this category. Responses from U15 and mid-sized institutions were mixed, split between “some” (U15: 50%; mid-size: 46%) and “large” (U15: 36%; mid-size: 36%) impact. Of the three small institutions that allocated funding to this category, one selected “some” impact and the other two selected “large” impact.

Overall, qualitative responses provided by institutions for the current evaluation indicate that RSF helps institutions provide access to research resources. As was the case with research facilities, the impact under this category is greater for large and research-intensive non-U15 institutions, which more frequently allocate to this category and allocate a larger proportion of their grant to it.

Compliance with regulatory requirements and accreditation

“The RSF contributes salary support for expert staff members facilitating ethically compliant research in both the Research Ethics Office and the Animal Care division.” (Large institution); “Funds are used towards salaries of staff working with compliance issues; funds were also used towards bio safety compliance.” (Medium institution)

Over $151 million has been invested through the RSF over the period from 2013-14 to 2017-18 to contribute to institutional compliance with regulatory requirements and accreditation. Frequent uses for RSF under this category relate primarily to the activities of ethics and oversight boards or programs, and to payment of salaries for technicians, administrators and specialized staff. Most institutions (86%) use funds in this category to cover salaries.

RSF funds in this expenditure category were used to increase capacity or develop new areas. Other reported uses include: installation or maintenance of IT systems to monitor, manage and report on compliance; training and workshops on regulatory requirements; and lab upgrades to meet compliance.

“The Research Support Fund enables us to meet regulatory and accreditation requirements, including costs related to the operations of the Research Ethics Board and Animal Care Committee, and paying a fee-for-service to a veterinarian for Animal Care Committee involvement and potential veterinary care services.” (Small institution)

Overall, about 65% of institutions surveyed that allocated funding to this category in 2017-18 indicated that RSF funding had a “large” impact; 33% reported it had “some” impact.

By supporting institutional functions such as ethics boards, the RSF is contributing to institutions’ compliance with regulatory and accreditation requirements. These findings are consistent with previous evaluations that also found that RSF contributes to institutions’ ability to meet regulatory requirements, namely in supporting and improving ethics review processes.

Research enterprise efficiently and effectively managed and administered

“Our research services currently employ two professionals, a technician and an administrative agent. Without RSF, we would have to cut those resources.” (Medium institution)

The largest investment of the RSF over the evaluation period has been in the management and administration expenditure category. A total investment of over $597 million has been used to help ensure that the research enterprise is efficiently and effectively managed and administered.

RSF funds allocated to this category were commonly used to cover salaries for personnel in administrative services, namely to assist researchers in preparing, submitting and administering grants. Several institutions clearly stated that, without RSF, they would be unable, or would struggle, to retain certain staff members.

RSF funding has also been used for departmental restructuring, research planning and promotion, and procurement. According to interview responses, funding for management and administration can also support the four other expenditure categories (e.g., at smaller institutions, by paying the salaries of administrative personnel who provide support in multiple areas).

As part of the evaluation survey, institutions were asked whether RSF funding was allocated “toward implementing equity, diversity and inclusion objectives” for fiscal year 2017-18. About 30% of respondents indicated that it was, most commonly non-U15 research-intensive (57%), U15 (36%) and large (33%) institutions. However, for institutions that allocate RSF funding to an overall operating budget and do not track RSF use at the sub-category level, it was difficult to determine whether funds were used for equity, diversity and inclusion (EDI). Institutions that reported having allocated RSF funding to implement EDI objectives generally indicated that RSF funds were used to support administrative, human resources (HR) and other functions that incorporate elements of EDI into their mandate or activities (e.g., because they operate under an institutional EDI plan or other EDI framework). Other examples of uses included: development of, or support for the implementation of, institutional or academic EDI plans; inclusion policies and/or frameworks; support to researchers to integrate EDI considerations in forming their teams (e.g., EDI training); and hiring of an equity officer.

Institutions that reported they did not allocate RSF to implementing EDI indicated that EDI responsibilities fall under functions and units that are funded independently of RSF (e.g., HR, an office of human rights and equity, base-funded positions, functions supported by other specialized funding). However, several institutions that selected “no” indicated that EDI-related RSF investment is planned, underway (e.g., RSF funds used in 2018-19) or expected in the short term. A few institutions indicated that their RSF grant is too small to be used in this area.

According to survey findings for 2017-18, and consistent with findings from previous outcome reports, reported impact in management and administration is very high, the strongest of all five expenditure categories. Overall, 81% of institutions (including all large institutions) indicated that RSF had a “large” impact in this category in the previous year. Most mid-sized (95%) and small (67%) institutions also selected “large” impact.

Knowledge mobilization and IP management is efficient and effective

“The Research Support Fund grant is used to cover costs related to providing training and support for faculty, as well as for research and academic staff, with respect to understanding knowledge mobilization and IP management, as well as any operating costs of the Research Office with respect to knowledge mobilization and IP management of research agreements and memoranda of understanding with communities or agencies.” (Small institution)

Approximately $102 million of RSF grants was invested in the transfer of knowledge, commercialization and management of intellectual property between 2013-14 and 2017-18. This investment helps ensure that knowledge mobilization and IP management are efficient and effective. The RSF enabled institutions to: provide support for new inventions; acquire licenses and patents; establish and maintain a technology transfer office; cover memberships in IP and technology/commercialization networks; and administer partnerships. According to the 2017-18 evaluation survey, 80% of respondents indicated that RSF covers salary costs under this category, most frequently at research-intensive institutions.

Some institutions use RSF funds to: support specialized staff or dedicated units who act as liaisons between researchers and partners, or intellectual property experts; facilitate partnerships with industry; and cover patent fees, legal counsel and training related to intellectual property. The RSF also covered costs associated with intellectual property or information transfer, as well as training and operating costs related to intellectual property and knowledge mobilization within the broader research function.

When asked about the impact of RSF on knowledge mobilization and IP management, 62% of respondents indicated it had a “large” impact and 36% indicated it had “some” impact. In the evaluation survey, respondents from non-U15 research-intensive institutions and large institutions tended to report a “large” impact, while U15 and mid-sized institutions mostly reported “some” impact.

3.1.3 Final outcomes—Contribute to a strong Canadian research environment

“Staff from the Institutional Programs division are embedded in Research Services. This team supports institutional programs such as CFI, CRC, CERC and other large tri-council grants. As this team supports the research community in both pre- and post-award activities, there is continuity in the support of these large initiatives and reduces the administrative burden to the researchers.” (U15 institution)

The Research Support Fund, in conjunction with other sources of direct and indirect support for research, is expected to optimize the use of federal direct research funding and contribute to the competitiveness of Canadian institutions on the world stage. This is ultimately expected to contribute to a strong Canadian research environment. It should be noted that the logic model’s intended final outcome is directly tied to SSHRC’s intended departmental outcome, “Canada’s university and college research environments are strong.”

“RSF funds are used annually to support positions within the research accounting team within finance. These positions are responsible for annual reporting to the tri-council, thereby relieving this task from researchers or staff within faculties. Having funding to centralize this function allows for efficiency and effectiveness as the staff develop expertise at a level that a distributed model would not allow for as staff would have many other tasks not just research support and administration.” (Research-intensive, non-U15 institution)

Optimizing the use of federal direct research funding and its contribution to the competitiveness of Canadian institutions on the world stage were not directly measured during this evaluation, given the expected limitations in quantifying RSF’s contribution (i.e., RSF only contributes to defraying the indirect costs of research and so long-term outcomes are heavily influenced by external factors beyond the scope of this evaluation).

The RSF has contributed to these outcomes in several ways. The investment in indirect costs covers many support functions, including technical support of equipment, grant application and administration, the provision of library infrastructure and services, human resources management and facilitation of the disclosure of research results. This relieves researchers of these functions, allowing them to focus on their projects, many of which are funded directly through federal research grants. Moreover, some institutions reported investing in the development of scholarly journals for the purpose of dissemination, which allows students and faculty to easily publish their research. Such dissemination enhances other researchers’ access to this research, thus strengthening the Canadian research environment. Furthermore, the opportunity to publish their research gives students early-career advantages and greater opportunity to conduct impactful research in the long term. In addition, training and workshops supported through RSF funding help maintain and increase research knowledge.

These findings are consistent with previous evaluations that noted RSF’s importance in supporting the preparation of research proposals and funding applications, and in generally reducing the administrative burden on researchers, in turn positively affecting research productivity.

The transitional reporting tool (described in section 4.2) asked institutional representatives to what extent they agree that the RSF has contributed to sustaining a strong research environment. Representatives from the majority of institutions (93% in 2015-16, 99% in 2016-17) indicated that they agree or strongly agree that the RSF has contributed to sustaining a strong research environment at their institution. In 2015-16 and 2016-17, institutions were also asked to provide one example of how the RSF contributed to sustaining their research environment. The majority of impacts noted were categorized under management and administration of research, more specifically HR and payroll. This aligns with the fact that most institutions allocate a large proportion of their RSF funds to this expenditure category. For smaller institutions (which represent almost half of recipient institutions), this is the primary expenditure category.

SSHRC’s performance in relation to its intended departmental outcome, “Canada’s university and college research environments are strong,” is outlined in Table 4 below. Two of the departmental result indicators are the same investment indicators described earlier in this report (investment in research facilities and investment in management and administration). This evaluation explored these indicators further (in section 3.1.2) to understand how institutions use these investments and how the investments contribute to intermediate and long-term outcomes. The departmental results also include a macro indicator: the average number of Canadian institutions among the top 250 of international university rankings. This indicator serves as a measure of the competitiveness of Canadian institutions on the world stage. All university rankings take into consideration several components of a university’s teaching and research activities. Given that research performance and productivity are key components of a university’s overall performance in the rankings, the number of Canadian universities appearing in the world rankings can be attributed in part to the support for the direct and indirect costs of research provided through tri-agency programs. The RSF contributes to this macro indicator, as it is the only tri-agency program designed solely to support the indirect costs of research. In conclusion, the RSF is contributing to a strong Canadian research environment.

Table 4 Departmental result framework

| Departmental Results | Departmental Result Indicators | Target | Date to achieve target | 2015-16 Actual results | 2016-17 Actual results | 2017-18 Actual results |
|---|---|---|---|---|---|---|
| Canada’s university and college research environments are strong | Total percentage of funds invested in research facilities | 25%-35% | March 2020 | 30% | 30% | 30% |
| | Total percentage of funds invested in management and administration | 30%-40% | March 2020 | 35% | 35% | 33% |
| | Average number of Canadian institutions among the top 250 of international university rankings | Min. 10 | March 2020 | 12 | 11 | 11 |

Source: Social Sciences and Humanities Research Council 2019-20 Departmental Plan

3.2 Efficiency and Economy

Conclusion:

Evaluation Question: Has the RSF continued to be delivered in a cost-efficient manner?

The RSF program has a low operating ratio that has decreased over time. The operating costs of the RSF were, on average, 13¢ for every $100 in grant expenditures. This suggests that the program has continued to be delivered in a cost-efficient manner. Concerns were raised, however, about the sufficiency of resources for program administration, making it challenging to allocate sufficient resources to change management, stakeholder engagement and improved performance monitoring. Concerns were also raised about the efficiency of the process used to calculate grant amounts, which relies on each of the three agencies to provide the amounts of direct funding to TIPS.

3.2.1 RSF Administrative Expenditures Profile

The overall administrative costs for the RSF from 2013-14 to 2017-18 were $2,294,090, with the majority of expenditures recorded in the “Indirect and Direct Non-Attributable” category (57.2%). This category includes general administrative costs incurred by SSHRC, such as expenses generated by other divisions (Internal Audit and Corporate Affairs, for example) as well as by the Common Administrative Services Directorate (CASD). It was followed by “Direct Salary,” which consists of salaries expended by TIPS (39.2%), and “Direct Non-Salary” (3.6%), which consists of costs for transportation, telecommunications, publishing, professional and special services, printing, etc. Table 5 presents the RSF administrative costs over the five-year evaluation period.

The amount of money allocated to the three categories did not vary much over time. While “Direct Salary” expenditures increased by 25.5% from 2016-17 to 2017-18, this increase represents less than the cost of one FTE.
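The 25.5% figure can be reproduced from the Direct Salary row of Table 5. This sketch computes only the dollar and percentage change; the report does not state the cost of one FTE, so that comparison is not modelled here.

```python
# Direct Salary expenditures from Table 5.
salary_2016_17 = 176_122
salary_2017_18 = 221_047

# Absolute and relative year-over-year change.
increase = salary_2017_18 - salary_2016_17
pct_increase = 100 * increase / salary_2016_17

print(f"Increase: ${increase:,} ({pct_increase:.1f}%)")
```

This prints an increase of $44,925, or 25.5%.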

Table 5 Administrative Expenditures for the Research Support Fund

| Expenditures | 2013-14 | 2014-15 | 2015-16 | 2016-17 | 2017-18 | Total |
|---|---|---|---|---|---|---|
| Direct Salary | $164,136 | $167,103 | $171,407 | $176,122 | $221,047 | $899,815 |
| Direct Non-Salary | $28,970 | $26,772 | $17,081 | $3,261 | $6,412 | $82,496 |
| Total Direct | $193,106 | $193,875 | $188,488 | $179,383 | $227,459 | $982,311 |
| Indirect and Direct Non-Attributable | $282,777 | $284,984 | $252,533 | $244,057 | $247,428 | $1,311,779 |
| Total | $475,883 | $478,859 | $441,021 | $423,440 | $474,887 | $2,294,090 |

Source: Social Sciences and Humanities Research Council—Awards Management, Compliance and Accountability unit

3.2.2 Cost-efficiency

A common measure of the operational efficiency of grant programs is the ratio of operating expenditures to the total amount of grant funds awarded. This ratio represents the cost of administering $1 of grant funds; below, it is expressed in cents per $100 of grants. To assess changes in the program’s operational efficiency, a historical analysis was conducted of the administrative expenditures of the RSF and its predecessor, the Indirect Costs Program, for fiscal years 2008-09 to 2017-18. The results are presented in Table 6.
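The ratio described above can be written as operating expenses divided by grants, multiplied by 10,000 to express it in cents per $100 of grants. The function below is a minimal sketch of that calculation; its name is hypothetical.

```python
def cents_per_100(operating_expenses: float, grants: float) -> float:
    """Return the operating cost, in cents, of administering $100 of grant funds."""
    if grants <= 0:
        raise ValueError("grants must be positive")
    # operating_expenses / grants is the dollar cost of administering $1 of grants.
    # Multiplying by 100 scales it to $100 of grants; by 100 again converts dollars to cents.
    return operating_expenses / grants * 100 * 100


# 2017-18 figures from Table 6: $474,887 in operating expenses against $369,403,000 in grants.
print(round(cents_per_100(474_887, 369_403_000), 1))  # 12.9
```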

The table shows that RSF’s operating cost ranged between 11.5¢ and 14.3¢ per $100 of grant expenditures from 2013-14 to 2017-18, or approximately 13¢ per $100 on average. Extending the comparison back to the ICP years shows a general downward trend over the past decade, from 19.9¢ per $100 in 2008-09 to 12.9¢ in 2017-18. This is consistent with previous evaluations of the ICP, which also found that “the program is extremely cost-efficient” and that “the costs of administering the program are very low.”
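As a cross-check of the range, average and trend described above, the sketch below recomputes each year's ratio from the Table 6 grant and operating figures and compares it with the reported value. It computes both a simple average of the five yearly ratios and a pooled ratio (total operating expenses over total grants); the report does not say which averaging it used, and both round to about 13¢. The least-squares slope is an illustrative way of summarizing the decade-long decline, not the evaluation's own method.

```python
# Rows of Table 6: (fiscal year, grants, operating expenses, reported cents per $100).
TABLE_6 = [
    ("2008-09", 329_055_000, 655_549, 19.9),
    ("2009-10", 325_379_000, 546_411, 16.8),
    ("2010-11", 330_062_000, 599_407, 18.2),
    ("2011-12", 332_387_611, 593_472, 17.9),
    ("2012-13", 332_387_611, 551_108, 16.6),
    ("2013-14", 332_403_000, 475_883, 14.3),
    ("2014-15", 341_403_000, 478_859, 14.0),
    ("2015-16", 341_403_000, 441_021, 12.9),
    ("2016-17", 369_555_583, 423_440, 11.5),
    ("2017-18", 369_403_000, 474_887, 12.9),
]


def cents_per_100(operating, grants):
    # Cost in cents of administering $100 of grants.
    return operating / grants * 10_000


# Recompute each year's ratio and check it against the value reported in Table 6.
ratios = {}
for year, grants, operating, reported in TABLE_6:
    ratios[year] = round(cents_per_100(operating, grants), 1)
    assert ratios[year] == reported, year

# Evaluation period: 2013-14 to 2017-18.
period = ["2013-14", "2014-15", "2015-16", "2016-17", "2017-18"]
period_ratios = [ratios[y] for y in period]
simple_avg = sum(period_ratios) / len(period_ratios)

# Pooled ratio: total operating expenses over total grants for the same five years.
rows = [r for r in TABLE_6 if r[0] in period]
pooled = cents_per_100(sum(r[2] for r in rows), sum(r[1] for r in rows))

# Least-squares slope of the yearly ratio over all ten years (cents per $100, per year).
ys = [ratios[r[0]] for r in TABLE_6]
xs = range(len(ys))
x_mean = sum(xs) / len(ys)
y_mean = sum(ys) / len(ys)
slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sum(
    (x - x_mean) ** 2 for x in xs
)

print(f"2013-14 to 2017-18 range: {min(period_ratios)} to {max(period_ratios)} cents")
print(f"Simple average: {simple_avg:.1f} cents; pooled: {pooled:.1f} cents")
print(f"Trend 2008-09 to 2017-18: {slope:.2f} cents per $100 per year")
```

The negative slope (roughly 0.85¢ per year) is consistent with the downward trend noted in the text, although individual years, such as 2010-11 and 2017-18, moved upward.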

Table 6 Program costs and cost efficiency of the RSF and ICP

| Fiscal Year | Grants and Scholarships | Operating Expenses | Total Expenses | Cents of Operating Expenses per $100 of RSF Grants |
|---|---|---|---|---|
| 2017-18 | $369,403,000 | $474,887 | $369,877,887 | 12.9 |
| 2016-17 | $369,555,583 | $423,440 | $369,979,023 | 11.5 |
| 2015-16 | $341,403,000 | $441,021 | $341,844,022 | 12.9 |
| 2014-15 | $341,403,000 | $478,859 | $341,881,858 | 14.0 |
| 2013-14 | $332,403,000 | $475,883 | $332,878,882 | 14.3 |
| 2012-13 | $332,387,611 | $551,108 | $332,938,719 | 16.6 |
| 2011-12 | $332,387,611 | $593,472 | $332,981,083 | 17.9 |
| 2010-11 | $330,062,000 | $599,407 | $330,661,407 | 18.2 |
| 2009-10 | $325,379,000 | $546,411 | $325,925,411 | 16.8 |
| 2008-09 | $329,055,000 | $655,549 | $329,710,549 | 19.9 |

Source: Social Sciences and Humanities Research Council—Awards Management, Compliance and Accountability unit

When the results were discussed with program management, concerns were raised about the sufficiency of resources for program administration and the efficiency of the method used to calculate grant amounts. Specifically, program management was concerned about the program's vulnerability to staffing changes, given the small size of the program team. These concerns were also raised in the previous evaluation of the program (then the ICP) and in the 2009 audit.

Comments also highlighted that limited staff resources had, in the past, made it challenging to devote sufficient resources to change management, stakeholder engagement and improved performance monitoring. It was also suggested that limited engagement with institutions when the performance reporting tools were changed may have negatively affected relationships between TIPS and the institutions.

Finally, it was noted that the process and formula used to calculate grant amounts were quite complex, and some concerns were raised about the efficiency of this process, which relies on each of the three agencies to provide the amounts of direct funding to TIPS. However, the complexity of this process was not assessed as part of the evaluation.

4.0 Performance Reporting

Conclusion:

Evaluation Question: How could performance information be collected (considering current challenges and barriers)?

A goal of the evaluation was to better understand why institutions have had difficulty with past performance reporting requirements introduced by TIPS and to identify possible approaches for performance monitoring that would both be acceptable to institutions and meet the needs of program management and government accountability requirements. While the consultations with the institutions helped shed some light on the former, it proved to be more challenging to reach a consensus on how performance information could be collected. The evaluation was able to suggest improvements to the existing reporting template based on what institutions indicated they could report on, but it is not certain that these improvements will meet the needs of program management and the government’s accountability requirements.

Specifically, the consultations with universities surfaced key reasons why larger institutions, in particular, have resisted reporting data intended to quantify the intermediate outcomes. Large and research-intensive universities most often reported pooling their RSF funds with other operating funds before allocating them to sub-categories, thereby losing the ability to link particular expenditures to RSF funds beyond the five expenditure categories. While they understood that they are accountable for the public funds they receive, they were concerned about the reporting burden: detailed tracking would require costly changes to their current accounting systems and significant staff training. In addition, many of the indicators collected in various reporting tools did not resonate with them as valid measures of success. From their perspective, spending resources to track measures they did not consider valid would not improve accountability.

That said, the evaluation was able to identify and suggest some indicators that could be used in the current reporting template. These included asking institutions to identify how and where they planned to allocate funds across the eligible expenditure categories; to indicate whether the RSF grant supported salaries in each eligible expenditure category; and to provide qualitative examples of ways in which the RSF contributed to the research environment within each eligible expenditure category. Additionally, the program could ask institutions to indicate or approximate how RSF funds were used under each eligible expenditure category, using a list of pre-established options (institutions could check multiple options). This would provide a picture of the investments made and could help track trends over time, without asking for specific metrics that would be of limited use.
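To make the suggested indicators more concrete, the sketch below models one possible shape for such a report: per category, a planned allocation share, a yes/no flag for salary support, a set of checked pre-established options, and a free-text example. It also shows how checked options could be tallied across institutions to follow trends over time. Everything here is hypothetical. The class, field and function names, the option wording, and the rule that planned shares sum to about 100% are illustrative assumptions, not TIPS's actual template. The category labels are assumed to correspond to the five eligible expenditure categories.

```python
from collections import Counter
from dataclasses import dataclass, field

# Assumed labels for the five eligible expenditure categories (illustrative).
CATEGORIES = (
    "Research facilities",
    "Research resources",
    "Management and administration",
    "Regulatory requirements and accreditation",
    "Intellectual property and knowledge mobilization",
)


@dataclass
class CategoryEntry:
    category: str
    planned_share_pct: float  # planned share of the RSF grant for this category
    supports_salaries: bool   # did the RSF grant support salaries in this category?
    options: set = field(default_factory=set)  # pre-established uses; several may be checked
    example: str = ""         # qualitative example of the contribution


@dataclass
class InstitutionReport:
    institution: str
    fiscal_year: str
    entries: list

    def validate(self) -> None:
        # Hypothetical checks: known categories, planned shares summing to about 100%.
        for entry in self.entries:
            if entry.category not in CATEGORIES:
                raise ValueError(f"unknown category: {entry.category}")
        total = sum(entry.planned_share_pct for entry in self.entries)
        if abs(total - 100) > 0.5:
            raise ValueError(f"planned shares sum to {total}, expected about 100")


def option_counts(reports, fiscal_year):
    """Count how many institutions checked each (category, option) pair in a year."""
    counts = Counter()
    for report in reports:
        if report.fiscal_year != fiscal_year:
            continue
        for entry in report.entries:
            for option in entry.options:
                counts[(entry.category, option)] += 1
    return counts


# Usage with two invented reports.
report_a = InstitutionReport("Institution A", "2018-19", [
    CategoryEntry(CATEGORIES[0], 40, True, {"Equipment maintenance"}),
    CategoryEntry(CATEGORIES[2], 60, True, {"Grant administration", "Research office staffing"}),
])
report_b = InstitutionReport("Institution B", "2018-19", [
    CategoryEntry(CATEGORIES[2], 100, False, {"Grant administration"}),
])
for report in (report_a, report_b):
    report.validate()

counts = option_counts([report_a, report_b], "2018-19")
print(counts[(CATEGORIES[2], "Grant administration")])  # 2
```

Counting checked options in this way, rather than collecting dollar amounts by sub-category, is one way to address the institutions' concern that their pooled accounting cannot trace RSF funds below the five categories.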

A goal of the evaluation was to better understand why institutions have had difficulty with past performance reporting requirements introduced by TIPS and to identify possible approaches for performance monitoring that would meet the needs of program management, be acceptable to institutions and meet government accountability requirements. To provide context for the evaluation findings, the policy requirements associated with performance reporting and past performance reporting challenges are outlined below. The findings then highlight what type of performance information institutions resist reporting on and why, as well as what type of performance information they can easily report on. Finally, based on an analysis of the final evaluation survey results, the evaluation suggests a few approaches that TIPS could consider piloting in the future.

4.1 Requirements Associated with Performance Reporting and Alternative Perspectives

From a policy perspective, it is up to program management to decide what kind of performance information it needs for managing and reporting on the program’s performance. The Directive on Results requires that program officials be responsible for ensuring that valid, reliable and useful performance data are collected and available for the following purposes:

- managing programs;

- assessing the effectiveness and efficiency of programs; and

- meeting the performance information requirements of Treasury Board of Canada submissions, evaluations and central agencies.

All these purposes are relevant to RSF.

RSF is a grant program and, as such, is also subject to the Policy on Transfer Payments (PTP). This policy indicates that while a grant is not subject to being accounted for by a recipient, the recipient may be required to report on results achieved. Such reporting requirements should be proportionate to the level of risks specific to the program, the materiality of funding and the risk profile of applicants and recipients. The PTP articulates a number of expected outcomes, which can be seen as principles to guide the management of such payments. Those relevant to monitoring and reporting requirements are:

“Transfer payment programs are designed, delivered and managed in a manner that takes account of risk and clearly demonstrates value for money.”

“Administrative requirements on applicants and recipients, which are required to ensure effective control, transparency and accountability, are proportionate to the level of risks specific to the program, the materiality of funding, and to the risk profile of applicants and recipients.”

The RSF Terms and Conditions (T&Cs) from 2018 outline general expectations related to performance reporting under the program. Specifically, “All recipients are required to produce annual statements of account, outlining the disposition of funds. Institutions whose Research Support Fund grant exceeds $25,000 must communicate on a public-facing website how much funding their institution has invested in each of the five expenditure categories over the reporting period.” These institutions must also complete and submit the transitional outcomes report. The T&Cs do not, however, dictate what performance information has to be collected.
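The reporting obligations quoted from the 2018 T&Cs reduce to a simple rule keyed on grant size. The sketch below encodes that rule. It assumes that “exceeds $25,000” means strictly greater than $25,000, and the function and field names are hypothetical. As the T&Cs themselves note, it says nothing about what performance information must be collected.

```python
from dataclasses import dataclass

# Threshold from the 2018 RSF T&Cs, in dollars; "exceeds" is read as strictly greater than.
PUBLIC_REPORTING_THRESHOLD = 25_000


@dataclass(frozen=True)
class Obligations:
    annual_statement_of_account: bool
    public_website_disclosure: bool
    transitional_outcomes_report: bool


def reporting_obligations(grant_amount: float) -> Obligations:
    """Return the reporting obligations for a given annual RSF grant (hypothetical helper)."""
    exceeds = grant_amount > PUBLIC_REPORTING_THRESHOLD
    return Obligations(
        annual_statement_of_account=True,      # required of all recipients
        public_website_disclosure=exceeds,     # amounts invested in each of the five categories
        transitional_outcomes_report=exceeds,  # required of the same institutions
    )


print(reporting_obligations(20_000))
print(reporting_obligations(1_500_000))
```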

To offer some insight into the approaches other research funders use for reporting on indirect costs, the interviews with institutional representatives briefly explored whether other funding programs have similar reporting requirements for indirect costs. The results suggested that these other programs use many models for funding indirect costs (often referred to as overhead), and thus many reporting approaches. Approaches included: reporting overhead costs as a single line item, often determined by applying a percentage to the overall grant; allowing a fixed percentage for overhead, with no requirement to report on overhead costs after approval; and reporting on pre-approved specific costs (e.g., equipment operations) that support the grant.

4.2 Challenges and Barriers Pertaining to Performance Reporting

Since the inception of the RSF program (previously the ICP), it has been difficult to implement performance measurement that reflects both what institutions say they can easily report on and the needs of program management and government accountability requirements. Past program evaluations have also faced significant challenges in tracing and quantifying the program’s outcomes, but it has been possible to partly mitigate these challenges by integrating multiple lines of inquiry from both qualitative and quantitative data sources. This has made it possible to build a performance story showing that the program is contributing to defraying the indirect costs associated with federal research funding. It has, however, been even more challenging to find a set of simple performance measures that can convincingly speak to that contribution on an annual basis, again because it remains difficult to get institutions to report on expenditures in eligible sub-categories and to quantify intermediate outcomes.